Docker and Nginx as a reverse proxy to handle server-side tagging.

In this article, you will learn how to implement server-side tagging in Google Tag Manager (GTM) step by step, using preview and tagging servers running in Docker containers, with Nginx as a reverse proxy in front of them.

The issues addressed in this article:

- What is server-side tagging anyway, and why should it be implemented?

- Configuration on the Tag Manager side

- Nginx as a proxy for docker containers

- Non-standard behavior of containers launched from images prepared by Google, and how to deal with it

What is server-side tagging and what is it used for? Server-side tagging in theory

Before server-side tagging was introduced, tags could only be placed and fired in the browser on the client device. Consequently, we had little control over what data was sent and where it went.

Source of the image: Google Docs → Client-side tagging

Server-side tagging introduces an additional layer of control over the flow of data between the application and tools such as analytics, conversion tracking and advertising platforms.

Source of the image: Google Docs → Server-side tagging

In addition, server-side tagging offers the following advantages:

- improved site performance, because tag code runs on the server instead of on the client device,

- the ability to enforce a stricter Content Security Policy (CSP),

- increased privacy, since you can, for example, remove the IP address from the data before it is sent to vendor endpoints.

A downside is, of course, the cost: you need your own server and someone on the team who is competent to manage it.

Preparations on the Tag Manager side

In this section, you will find out how to get the “server container id” necessary for the server deployment.

1. Open the Tag Manager main page and choose “Create container”.

2. In the next view, the last option in the list is “Server”. Select it and give the container any name; the placeholder suggests something like www.mysite.com, while the container in the screenshot is named “test”. Click “Create”.

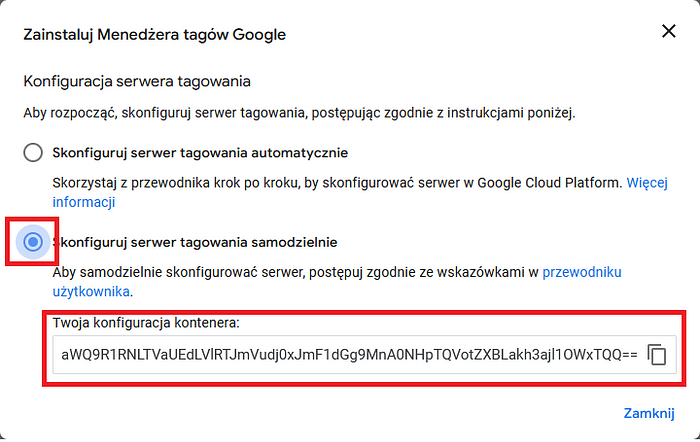

3. A window with two options appears. One of them lets you set up the tagging server yourself. Select it, and a field containing a long string appears. This string is the “server container id”, which you will need later to configure the tagging and preview server containers in Docker.

4. Click the “Close” button in that window and go to “Administration” → “Container Settings”.

5. In the window that appears, click the “Add URL” button and enter the URL of the subdomain that will host the tagging server (for example, https://sst.example.com).

6. Click the “Save” button in the upper right corner. That’s it.

Docker container configuration

Next, we present and discuss the docker-compose configuration, which defines two containers: a preview server and a tagging server.

version: "3.8"

services:

preview-server:

image: gcr.io/cloud-tagging-10302018/gtm-cloud-image:stable

container_name: preview-server

environment:

CONTAINER_CONFIG: ${CONTAINER_CONFIG}

RUN_AS_PREVIEW_SERVER: "true"

ports:

- "8081:8080"

server-side-tagging-cluster:

image: gcr.io/cloud-tagging-10302018/gtm-cloud-image:stable

container_name: server-side-tagging-cluster

environment:

CONTAINER_CONFIG: ${CONTAINER_CONFIG}

PREVIEW_SERVER_URL: ${PREVIEW_SERVER_URL}

ports:

- "8082:8080"

depends_on:

- preview-serverNote that both preview-server and server-side-tagging-cluster services use the same image. However, they differ in some details.

The first service is used when you click the “Preview” button in the upper right corner of the Google Tag Manager workspace.

It requires the environment variable RUN_AS_PREVIEW_SERVER to be set to “true”. The second variable, CONTAINER_CONFIG, is shared by both services and holds the “server container id”. Its value is read from a separate file, which we will return to shortly.

The second service handles the actual tagging traffic and requires the PREVIEW_SERVER_URL variable, which tells it where the preview server is. It should start only after the preview-server is up, which is what the “depends_on” entry expresses. Note that this short form of “depends_on” only waits for the preview-server container to start, not for it to become healthy; with current Docker Compose, the long syntax with “condition: service_healthy” waits for the health check instead. Google recommends serving the two services from separate subdomains of the application’s domain, each accessible over HTTPS.
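Since both services depend on CONTAINER_CONFIG, and the preview server has to be reachable over HTTPS, a quick sanity check of the .env file (its layout is shown in the next section) before starting the stack can save some debugging. The sketch below assumes plain KEY=value lines without quotes or spaces around “=”; check_env_file is a hypothetical helper, not part of Docker or GTM.

```bash
#!/bin/bash
# Hypothetical helper: sanity-check the .env file used by docker-compose.
# Assumes plain KEY=value lines without quotes or spaces around "=".
check_env_file() {
    local file=${1:-.env} config preview_url

    if [ ! -r "$file" ]; then
        echo "cannot read $file" >&2
        return 1
    fi

    # Value of the first matching line, with the "KEY=" prefix removed.
    config=$(sed -n 's/^CONTAINER_CONFIG=//p' "$file" | head -n 1)
    preview_url=$(sed -n 's/^PREVIEW_SERVER_URL=//p' "$file" | head -n 1)

    if [ -z "$config" ]; then
        echo "CONTAINER_CONFIG is missing or empty" >&2
        return 1
    fi

    # The preview server is expected to be served over HTTPS.
    case "$preview_url" in
        https://?*) ;;
        *)
            echo "PREVIEW_SERVER_URL must be an https:// URL" >&2
            return 1
            ;;
    esac
}
```

For example, check_env_file .env && docker-compose up -d starts the containers only if the file passes the check.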

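Each service has its own container name, which makes it easy to find in the list of running containers, especially when the server-side tagging setup serves more than one application. The host ports 8081 and 8082 will be used later in the Nginx configuration. Since Nginx runs on the same host, you may also consider publishing them only on the loopback interface (for example "127.0.0.1:8081:8080"), so that the containers can be reached only through the proxy.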
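A plain “depends_on” list only orders container startup. If you start the services by hand and want the tagging server to come up only once the preview server actually reports healthy, a small polling loop over docker inspect is one option. This is a sketch: wait_for_healthy is a hypothetical helper, and it assumes the image defines a Docker health check, which the “healthy”/“unhealthy” statuses discussed later in this article suggest.

```bash
#!/bin/bash
# Hypothetical helper: wait until a container's health check reports "healthy".
# Usage: wait_for_healthy <container_name> [timeout_seconds]
wait_for_healthy() {
    local name=$1 timeout=${2:-120} interval=5 waited=0 status=unknown

    while [ "$waited" -lt "$timeout" ]; do
        # .State.Health.Status is "starting", "healthy" or "unhealthy"
        # for containers that have a health check configured.
        status=$(docker inspect --format '{{.State.Health.Status}}' "$name" 2>/dev/null) || status=missing
        if [ "$status" = "healthy" ]; then
            return 0
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done

    echo "timed out waiting for $name (last status: $status)" >&2
    return 1
}
```

Used together with the container names from docker-compose.yml: docker-compose up -d preview-server && wait_for_healthy preview-server && docker-compose up -d server-side-tagging-cluster.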

Environment variables in an external file

The containers from the previous section read their variables from an .env file located in the project’s root directory, next to the docker-compose.yml file. Its structure is as follows:

```text
CONTAINER_CONFIG=server-container-id-from-tag-manager
PREVIEW_SERVER_URL=https://preview.example.com
```

We expose everything to the outside world - Nginx as a reverse proxy

With the docker-compose.yml and .env files prepared, we can configure Nginx, which will act as a reverse proxy for the two services discussed earlier: the preview server and the tagging server.

In a previous article, I described a dockerized Nginx acting as a proxy server. This time, Nginx runs directly on the host rather than in a Docker container.

We create two files: preview.example.com for the preview server configuration and sst.example.com for the tagging server. They differ only in the subdomain name and the port passed to the “proxy_pass” directive (8081 for the preview server and 8082 for the tagging server, matching docker-compose.yml). Each file contains two “server {}” blocks. Below is the first one:

```nginx
server {
    server_name preview.example.com www.preview.example.com;
    return 301 https://$host$request_uri;
}
```

This block handles plain HTTP traffic on port 80 and permanently redirects it to HTTPS. According to the Nginx documentation, port 80 does not need to be specified explicitly in the “listen” directive:

“If the directive is not present then either *:80 is used if nginx runs with the superuser privileges, or *:8000 otherwise.”
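To confirm that the port-80 block behaves as intended, you can ask curl for the status code and the redirect target without following the redirect. The helper name check_https_redirect is hypothetical; %{http_code} and %{redirect_url} are standard curl --write-out variables.

```bash
#!/bin/bash
# Hypothetical helper: check that http://<host>/ answers with a 301 to https://<host>/.
check_https_redirect() {
    local host=$1 result code location

    # -o /dev/null discards the body; curl does not follow redirects without -L.
    result=$(curl -s -o /dev/null --max-time 10 \
        -w '%{http_code} %{redirect_url}' "http://${host}/") || return 1

    code=${result%% *}       # text before the first space
    location=${result#* }    # text after the first space

    [ "$code" = "301" ] && [ "$location" = "https://${host}/" ]
}
```

For example, check_https_redirect preview.example.com && echo "redirect OK".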

The second block is more complex:

```nginx
server {
    # Since nginx 1.25.1 the "http2" parameter of "listen" is deprecated
    # in favor of a separate "http2 on;" directive.
    listen 443 ssl http2;
    server_name preview.example.com www.preview.example.com;

    # SSL
    ssl_trusted_certificate /etc/letsencrypt/live/preview.example.com/chain.pem;
    ssl_certificate /etc/letsencrypt/live/preview.example.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/preview.example.com/privkey.pem;
    include snippets/ssl-config.conf;

    # CONFIGS
    include snippets/secure-headers.conf;
    # Kept at server level: nginx inherits add_header directives only when
    # the current level (here: location /) defines none of its own.
    add_header X-Robots-Tag "noindex, nofollow";

    # CUSTOMIZATIONS
    location / {
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Host $http_host;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_pass http://localhost:8081;
    }
}
```

Going through this block step by step: first we specify the port and subdomains Nginx should listen on, and that the traffic is encrypted (ssl) and served over HTTP/2. Next, the “# SSL” section sets the certificate paths and includes the SSL configuration, and the “# CONFIGS” section includes the security headers and adds the X-Robots-Tag header.

- The “location /” block contains the directives that make Nginx act as a reverse proxy.

- “proxy_set_header Host $host” sets the Host header of the request passed to the backend to $host, which holds the host name from the client request or, if the request has none, the matching “server_name”.

- “proxy_set_header X-Real-IP $remote_addr” passes the IP address of the client connected to Nginx.

- “proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for” passes the X-Forwarded-For header received from the client (the addresses of any earlier proxies) with the address of the client connected to Nginx appended.

- “proxy_set_header X-Forwarded-Host $http_host” passes the value of the Host header from the original client request.

- “proxy_set_header X-Forwarded-Proto $scheme” passes the protocol (http or https) used in the client request.

- “proxy_pass” specifies the backend to which Nginx forwards requests, here the preview server container published on port 8081.

- “add_header X-Robots-Tag” adds a header to the HTTP response sent to the client. The value “noindex, nofollow” tells search engine crawlers not to index the response or follow its links. Keep in mind that Nginx inherits “add_header” directives from the enclosing level only if the current level defines none of its own, so an “add_header” inside “location /” would prevent the security headers included at the server level from being sent.
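The difference between X-Real-IP and X-Forwarded-For is easiest to see in how Nginx builds $proxy_add_x_forwarded_for: it takes the X-Forwarded-For header sent by the client and appends $remote_addr after a comma, or uses $remote_addr alone if the header is absent. The shell function below only mimics that rule for illustration; it is not part of Nginx, and the addresses are documentation examples.

```bash
#!/bin/bash
# Illustration only: mimics how Nginx computes $proxy_add_x_forwarded_for.
# $1 = incoming X-Forwarded-For header (may be empty), $2 = $remote_addr
proxy_add_x_forwarded_for() {
    local incoming=$1 remote_addr=$2
    if [ -z "$incoming" ]; then
        printf '%s\n' "$remote_addr"
    else
        printf '%s, %s\n' "$incoming" "$remote_addr"
    fi
}

# Direct client: the header contains only the client's own address.
proxy_add_x_forwarded_for "" "203.0.113.7"               # prints 203.0.113.7
# Client behind another proxy: Nginx appends the address it actually sees.
proxy_add_x_forwarded_for "198.51.100.23" "203.0.113.7"  # prints 198.51.100.23, 203.0.113.7
```

Since the earlier entries come from the client, they can be forged; only the address appended by your own proxy (also sent as X-Real-IP) is reliable.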

Nginx — secure headers, ssl configuration and certificates

Above we focused on taking apart the “server {}” blocks and only mentioned the SSL configuration and security headers in passing.

Below is the ssl-config.conf file:

```nginx
ssl_session_timeout 1d;
ssl_session_cache shared:MozSSL:10m;
ssl_session_tickets off;

ssl_protocols TLSv1.2 TLSv1.3;
ssl_ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384;
ssl_prefer_server_ciphers off;

ssl_dhparam /etc/letsencrypt/ssl-dhparams.pem;

# OCSP stapling
ssl_stapling on;
ssl_stapling_verify on;
```

The file was generated with the Mozilla SSL Configuration Generator to ensure an appropriate level of security for the connection.
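The ssl_protocols line is what limits connections to TLS 1.2 and 1.3. If you copy this snippet between servers, a small check like the one below can flag a file that re-enables older protocols. It is a rough, hypothetical lint: it reads only the first uncommented ssl_protocols directive and does not follow include files, so nginx -t remains the authoritative syntax check.

```bash
#!/bin/bash
# Hypothetical lint: ensure an Nginx snippet enables only TLS 1.2 and/or 1.3.
# Reads the first "ssl_protocols" directive; does not follow include files.
check_ssl_protocols() {
    local file=$1 protocols p

    # Print the directive's arguments without its name and the trailing ";".
    protocols=$(awk '$1 == "ssl_protocols" { sub(/;[ \t]*$/, ""); $1 = ""; print; exit }' "$file")

    if [ -z "$protocols" ]; then
        echo "no ssl_protocols directive in $file" >&2
        return 1
    fi

    for p in $protocols; do
        case "$p" in
            TLSv1.2|TLSv1.3) ;;
            *)
                echo "outdated protocol enabled: $p" >&2
                return 1
                ;;
        esac
    done
}
```

For example, check_ssl_protocols /etc/nginx/snippets/ssl-config.conf, assuming the snippet lives in the usual /etc/nginx/snippets directory.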

The second file, secure-headers.conf, contains headers designed to increase the security of the web application:

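```nginx
# HSTS
add_header Strict-Transport-Security "max-age=31536000" always;

# XSS Protection
add_header X-XSS-Protection "1; mode=block";

# Clickjacking
add_header X-Frame-Options "SAMEORIGIN";

# X-Content Type Options
add_header X-Content-Type-Options nosniff;

# Secure Cookie
add_header Set-Cookie "Path=/; HttpOnly; Secure";
```

- Strict-Transport-Security tells the browser to connect to the application only over HTTPS for the next year (max-age=31536000 seconds).

- X-XSS-Protection enables the built-in XSS filter of older browsers. Modern browsers ignore this header, so today the real protection against Cross Site Scripting comes from a Content Security Policy.

- X-Frame-Options: SAMEORIGIN protects against clickjacking by allowing the page to be embedded in frames only by pages from the same origin.

- X-Content-Type-Options: nosniff protects against MIME sniffing attacks by making the browser respect the declared Content-Type.

- The Set-Cookie line is meant to make cookies available on all paths of the site, inaccessible to JavaScript and sent only over HTTPS. Note, however, that “add_header Set-Cookie” does not change cookies set by the tagging server: it adds a separate cookie to every response, which browsers parse as a cookie named “Path” with the value “/”. To add the Secure and HttpOnly flags to cookies coming from the backend, Nginx 1.19.3 and newer provide the “proxy_cookie_flags” directive.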
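Because of the way Nginx inherits “add_header” directives, it is worth checking which headers actually reach the client. The sketch below fetches the response headers of the /healthz endpoint (used later in this article for health checks) and reports the missing ones. check_security_headers is a hypothetical helper, and the header list is an assumption you can adjust.

```bash
#!/bin/bash
# Hypothetical helper: report security headers missing from a live response.
check_security_headers() {
    local host=$1 headers h missing=0

    # -D - dumps the response headers to stdout; the body is discarded.
    headers=$(curl -sS --max-time 10 -D - -o /dev/null "https://${host}/healthz") || return 1

    for h in Strict-Transport-Security X-Frame-Options X-Content-Type-Options X-Robots-Tag; do
        # Header names are case-insensitive (HTTP/2 sends them in lowercase).
        if ! grep -qi "^${h}:" <<<"$headers"; then
            echo "missing header: ${h}"
            missing=1
        fi
    done
    return "$missing"
}
```

For example, check_security_headers preview.example.com prints nothing and succeeds when all four headers are present.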

Once the Nginx configuration for both subdomains is ready, first comment out the “listen”, “ssl_trusted_certificate”, “ssl_certificate” and “ssl_certificate_key” lines in the HTTPS block, because the certificates have not been generated yet, and then reload the Nginx configuration with the command:

```bash
systemctl reload nginx.service
```

Now run the following command to obtain Let’s Encrypt certificates for the subdomains the containers will be served from:

```bash
certbot --nginx -d preview.example.com -d www.preview.example.com
```

Repeat it for sst.example.com. Note that with --nginx, certbot also tries to install the certificate into the matching server block; if you prefer to keep the hand-written configuration untouched, “certbot certonly --nginx” only obtains the certificate. Then uncomment the lines mentioned above and reload the Nginx configuration again. When the Nginx configuration is complete, start the Docker containers by running the following command from the project root directory:

```bash
docker-compose up -d
```

Non-standard Docker container behavior

Several minutes after launch, the status of the containers changed to “unhealthy”. According to information obtained from Google engineers, “unhealthy” does not mean that the container has stopped working, but that the software is asking for a restart, for example to download a newer version of additional modules. We therefore wrote a bash script that runs periodically, checks whether any containers have this status and, if so, restarts them. Below is the code:

```bash
#!/bin/bash
# Script finds out which container has unhealthy status and restarts it

for container_id in $(docker ps -q -f health=unhealthy)
do
    echo "Restarting unhealthy container: $container_id"
    docker restart "$container_id"
done
```

Once the file is saved, give its owner permission to execute it:

```bash
chmod 700 script.bash
```

Then add a line such as the following to the cron table (for example with crontab -e):

```text
*/1 * * * * /path/to/script.bash
```

The script will then run every minute and restart any “sick” container. 🙂 The cron table should belong to a user allowed to run docker commands, such as root or a member of the docker group.
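The script above restarts every unhealthy container on the host, which may be more than you want on a shared server. A variant that only looks at the two containers defined in docker-compose.yml could look like this. It is a sketch that assumes the names match the container_name values from the compose file; restart_if_unhealthy is a hypothetical name.

```bash
#!/bin/bash
# Hypothetical variant: restart only the named containers when unhealthy.
restart_if_unhealthy() {
    local name status
    for name in "$@"; do
        # Skip names that do not exist or have no health check configured.
        status=$(docker inspect --format '{{.State.Health.Status}}' "$name" 2>/dev/null) || continue
        if [ "$status" = "unhealthy" ]; then
            echo "Restarting unhealthy container: $name"
            docker restart "$name" > /dev/null
        fi
    done
}

restart_if_unhealthy preview-server server-side-tagging-cluster
```

The same cron entry can point to this script instead of the original one if you prefer the narrower behavior.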

The confidence that everything is working as it should is priceless

There are several ways to check whether everything works. The first is to look at the logs: display them with the docker compose logs service-name command and check whether lines similar to the following appear:

```text
server-side-tagging-cluster-poc | Your tagging server is running on the latest version.
server-side-tagging-cluster-poc | Listening on {"address":"::","family":"IPv6","port":8080}
server-side-tagging-cluster-poc | Sending aggregate usage beacon (see https://www.google.com/analytics/terms/tag-manager/): https://www.googletagmanager.com/sgtm/a?v=s1&id=GTM-XXXYYYZZZ&iv=21&bv=3&rv=39k0&ts=ct_http!400*1.d_node-ver!18*1&cu=0.28&cs=0.13&mh=9277824&z
server-side-tagging-cluster-poc | Sending aggregate usage beacon (see https://www.google.com/analytics/terms/tag-manager/): https://www.googletagmanager.com/sgtm/a?v=s1&id=GTM-XXXYYYZZZ&iv=21&bv=3&rv=39k0&ts=ct_http!400*1&cu=0.25&cs=0.02&mh=10881248&z
```

The second way is to run the docker-compose ps command and check whether the “State” column shows “Up (healthy)”.

The third way is to query the “/healthz” endpoint. If the browser displays “ok”, the server is up and running. Alternatively, you can use cURL with the command curl https://preview.example.com/healthz (and the same for sst.example.com).
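To check both subdomains in one go, for example from a monitoring job, the curl call can be wrapped in a loop. This assumes /healthz returns a body of exactly “ok”, as described above; check_healthz is a hypothetical helper.

```bash
#!/bin/bash
# Hypothetical helper: query /healthz on each host and report OK/FAIL.
# Returns non-zero if any host fails.
check_healthz() {
    local host body failed=0
    for host in "$@"; do
        # -f makes curl fail on HTTP errors; --max-time avoids hanging forever.
        if body=$(curl -fsS --max-time 10 "https://${host}/healthz") && [ "$body" = "ok" ]; then
            echo "OK   ${host}"
        else
            echo "FAIL ${host}"
            failed=1
        fi
    done
    return "$failed"
}
```

For example, check_healthz preview.example.com sst.example.com prints one line per subdomain and exits with a non-zero status if any of them is down.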

What if I want a cluster that supports tagging?

This is of course possible. It is enough to run one or more additional tagging server containers, even on separate physical machines, create subdomains and analogous Nginx configurations for them, and give them the same “server container ID” (CONTAINER_CONFIG) as the first instance.
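When tagging containers are added over time, it is easy to start one with an outdated .env file. A quick consistency check compares CONTAINER_CONFIG across running containers using docker inspect. This sketch assumes all the containers are managed by the same Docker daemon; for instances on other machines, run it on each machine and compare the results. The helper names are hypothetical.

```bash
#!/bin/bash
# Hypothetical helpers: compare CONTAINER_CONFIG across tagging containers.

# Print the CONTAINER_CONFIG value from a container's environment.
container_config_of() {
    docker inspect --format '{{range .Config.Env}}{{println .}}{{end}}' "$1" 2>/dev/null \
        | sed -n 's/^CONTAINER_CONFIG=//p'
}

# Succeed only if every named container has the same, non-empty value.
same_container_config() {
    local first="" name value
    for name in "$@"; do
        value=$(container_config_of "$name")
        if [ -z "$value" ]; then
            echo "no CONTAINER_CONFIG found in $name" >&2
            return 1
        fi
        if [ -z "$first" ]; then
            first=$value
        elif [ "$value" != "$first" ]; then
            echo "$name uses a different CONTAINER_CONFIG" >&2
            return 1
        fi
    done
}
```

For example, same_container_config server-side-tagging-cluster server-side-tagging-cluster-2, where the second name stands for a hypothetical additional instance.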

Summary: server side tagging using Docker and Nginx — what do you definitely need to know?

Implementing server-side tagging with Docker, Docker Compose and Nginx can be tricky and full of surprises, especially for those with no previous experience, which is why this article walks through it step by step. Before starting the implementation, it helps to understand what server-side tagging is and why it matters. The configuration of the individual services in Nginx and Docker Compose also deserves attention, and some issues, such as containers periodically reporting “unhealthy”, only surface after a successful deployment, so proper preparation is the key to success.